From insurance coverage assessments to diagnosing intensive care patients to finding new drug treatments, many decisions amid the global health crisis are being made with the input of intelligent software. Humans may still be the final decision-makers, but machine-learning algorithms can find patterns and connections that human minds cannot.

As public health officials and the tech sector have strategized coordinated responses to COVID-19, AI-enabled interventions have played a central role. Predictive software that can forecast outbreaks before they occur, technology for monitoring physical distancing, and Bluetooth-based contact tracing apps have all emerged as possible measures for combating the continued spread of the virus. However, the potential mass implementation of these tools poses important questions concerning the fairness and transparency of their decision-making.

Human decisions are auditable and interpretable. They are written into legal codes, expert systems and formal logic algorithms. It is possible to challenge them when we feel those decisions may be wrong. But trusting and challenging decisions made by machine learning-based artificial-intelligence systems is more difficult. We often call such systems black boxes — they use proprietary code, or their computations are so complex that even the scientists who created them aren’t able to understand or see their errors or the reasoning that led to their conclusions.

“No matter how smart or complex machine learning models get, they have to have some way of communicating to people.”

This challenge has led to the emergence of a field of AI research called explainability that is located at the intersection of machine learning, social science and design. Explainability experts address AI’s black box problem by producing models that are either more transparent or can explain their reasoning processes so that human users can understand, appropriately trust and effectively manage the myriad AI systems that weigh in on human affairs. Explainability models offer a view into how machines reason and why they are sometimes wrong. They are also a vital aspect of government regulations of AI systems. Canada, the European Union and the United Kingdom, for example, have ruled that individuals who have automated decisions made about them by the government have the right to an explanation.

“No matter how smart or complex machine learning models get, they have to have some way of communicating to people,” Bahador Khaleghi, a former colleague of mine who is currently a data scientist at H2O.ai, told me recently. “Otherwise, the analogue would be like a genius who won’t speak.” Khaleghi’s concerns are reflected in a broader set of conversations about what role explainability should play in ensuring regulatory compliance, detecting bias, debugging and enhancing machine learning models, and building protections against adversarial techniques or attempts to fool the models through malicious inputs.

Machine learning models, such as artificial neural networks, have proven extremely successful at tasks such as speech and object recognition. But how they do so remains largely a mystery. Artificial neural networks simulate the biological composition of the human brain, allowing computers to “learn” without being explicitly programmed. Such networks use mathematical calculations localized in artificial neurons that are organized into layers to process information. An initial input layer communicates data (images, market data, credit histories) to one or more “hidden layers” where processing is done. It is then linked to an output layer that produces anything from an image label to a prediction on stock market movements to a decision on whether or not someone should receive a loan. The network is trained and validated with a set of labeled data. If its predictions are incorrect, it adjusts its calculations, “learning” to improve its accuracy.

Types Of Explainability

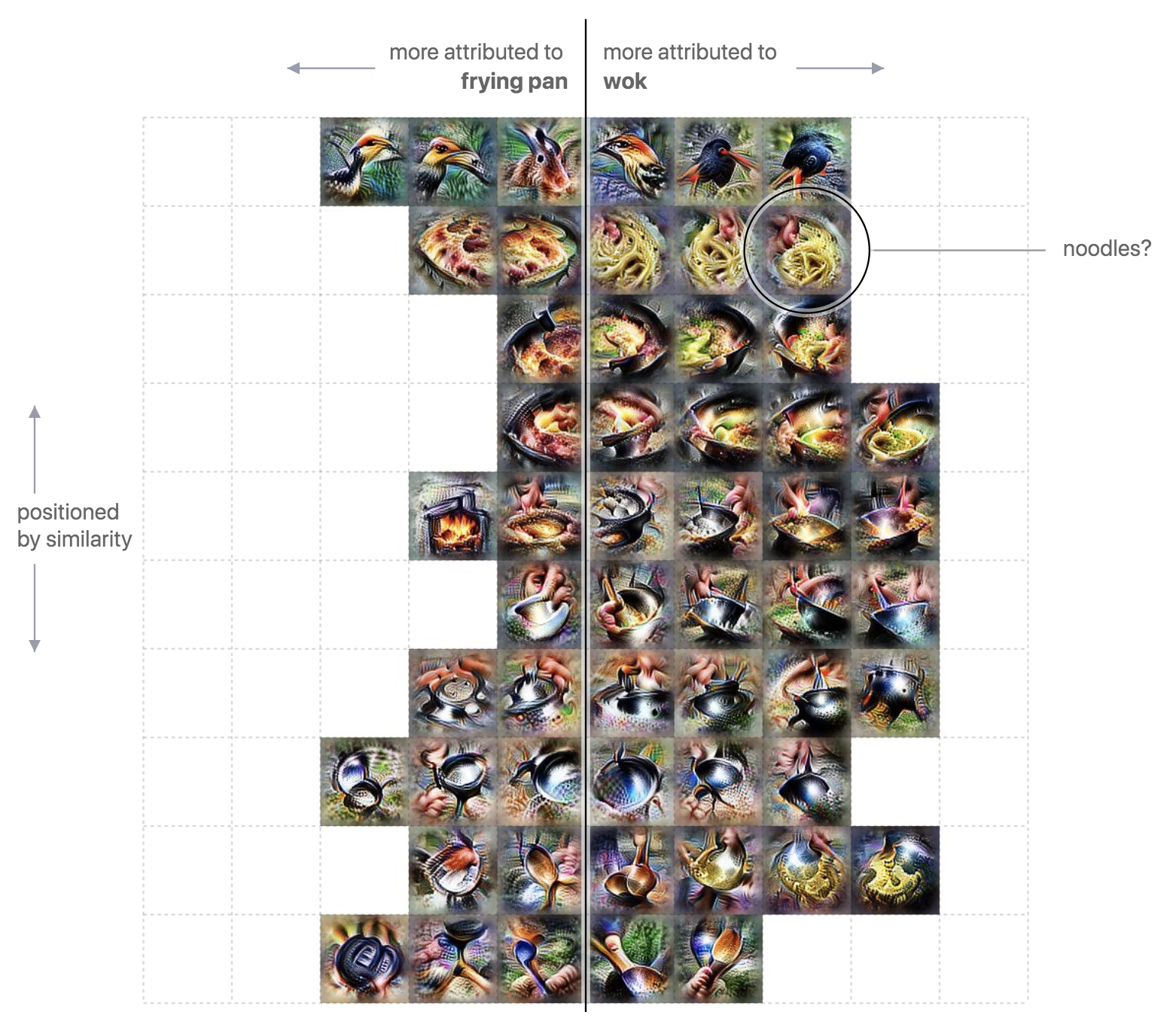

Several novel explainability techniques have emerged recently to allow artificial neural networks to explain their reasoning to humans. Activation atlases, developed by Open AI and Google last year, are one means of visualizing how black box computer algorithms classify images. Activation atlases build on feature visualization, a technique that studies what a model’s hidden layers represent by grouping artificial neurons that are activated when a model processes images.

The method can be useful in identifying subtle errors in machine learning. One such visualization revealed that a model that was trying to distinguish between woks and frying pans was mistakenly correlating noodles with woks, rather than identifying more representative features of a wok, like a deep bowl or short handle.

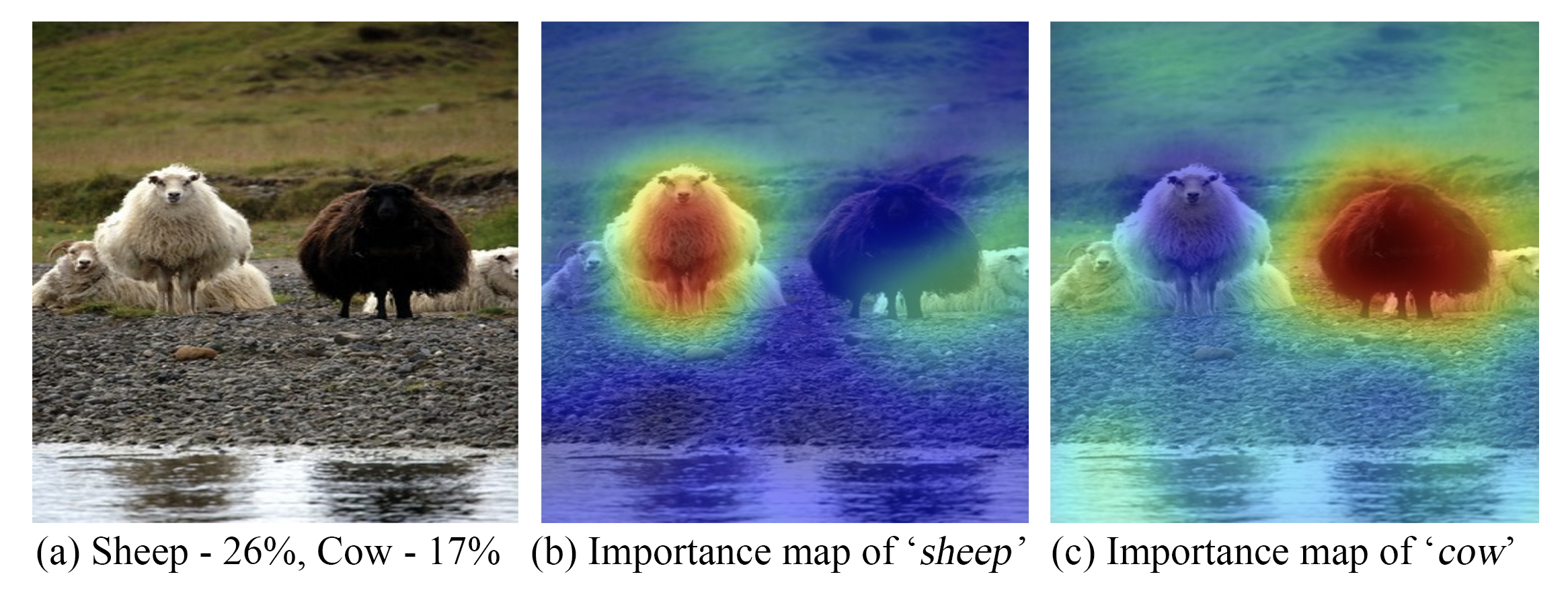

Saliency maps are another way of getting AI systems to at least partially explain their decisions. The RISE method produces a saliency map or a heat map that highlights which parts of an image were most important to its classification.

When you feed a photo into an image classifier, it will return a set of labels along with associated weights or levels of certainty. RISE will then generate a heatmap that shows how much each of the pixels in the image contributed to its classification. In the example below, RISE was able to show that one model that examined a picture of a white sheep and a black one determined the white sheep to be important in its classification of the object as a sheep and determined that the black one was likely a cow, probably due to a scarcity of black sheep in its training data set.

Counterfactual explanations, another method used to explain the outputs of machine learning models, reveal the minimal changes required to the input data to obtain a different result, effectively making it possible to understand how an algorithmic decision would have changed if certain initial conditions were different. Such explanations could be particularly well-suited to decisions that are based on a variety of data inputs and subject to legal requirements for explanations to prevent discrimination. For example, an individual who is denied a loan might get an explanation that a higher income or different zip code would have changed their eligibility. Here’s a hypothetical interaction:

Algorithm: “Your loan application was denied.”

Applicant: “Why?”

Algorithm: “If you earned $1,000 a month instead of $750, it would have been accepted.”

Applicant: “Disregarding my income and employment type, what can I do to get the loan?”

Algorithm: “You already have two loans. If you pay them back, you will get this loan.”

In the absence of clear auditing requirements, it will be difficult for individuals affected by automated decisions to know if the explanations they receive are in fact accurate or if they’re masking hidden forms of bias.

“The analogue would be like a genius who won’t speak.”

Regulatory Guardrails

Until a broader system of third-party audits comes into being, regulators will need to set limits on how algorithms can be used for decisions made about people. For example, Canada’s directive on automated decision-making limits automated decisions without direct human involvement to low-stakes decisions — those that will have little to moderate impact on the rights, health or economic interests of individuals and communities. And Canada appears likely to tighten regulations further. Two policies are currently under consideration — one that would extend the current right-to-an-explanation policy by granting individuals the right to explanations of automated decisions made by all entities (not just the government) and another that would grant the right to not be subject to decisions based solely on automated processing.

Hierarchies of accountability will also need to be established as AI begins to play a more prominent role in augmenting human decisions of all kinds. Contemporary automated systems, such as those used for automated flight, are already characterized by complex and distributed forms of control between humans and machines. This will only intensify with AI-powered systems, which, unlike older automated systems, still lack robust regulatory regimes and can therefore place users at risk.

For example, driverless cars require human drivers to be alert and present at the wheel to collaborate with artificially intelligent systems, though the immediate handover required when a system is unable to manage a situation means that drivers aren’t left with sufficient time to switch tasks safely. The spheres of responsibility of the designers, human users and service vendors of AI systems need to be clearly defined so that we know who is responsible when one fails.

Algorithm: “Your loan application was denied.” Applicant: “Why?”

Moreover, we will need to better understand how human and machine intelligences differ from one another. For example, a 2018 study showed that unlike humans, some deep learning computer vision algorithms rely more on textures than shapes to identify images. To such algorithms, a cat’s fur is more important to its classification as a cat than its characteristic shape, which tends to be the primary reference point for humans. When images of cats were filled in with elephant skin, the algorithms identified them as elephants rather than cats.

The dataset used to train an AI system also sets the terms by which it sees the world. This means that its “background knowledge” may differ from that of a human collaborator and is necessarily limited, as Sana Tonekaboni, a PhD student in the department of computer science at the University of Toronto, told me recently. Tonekaboni is developing a machine learning model to help doctors predict cardiac arrests in intensive care units. “Model inputs are limited to physiological data, such as heart rate and so on,” she said. “If a patient comes into the ER expressing suicidal impulses” — through their speech or behavior or in other ways that are typically not captured by machine-based monitoring converted into patient data — “a model obviously cannot factor that into its prediction.”

How To Trust Machines That Think

Given these differences in background knowledge, it is important to consider how humans trust and develop their understandings of the way AI systems think — referred to by Jennifer Logg, an assistant professor of management at Georgetown University, as “theory of machine.” Logg’s research suggests that trust in an AI system depends in part on a user’s estimation of their own ability and expertise in a given area. Lay people report that they’d trust algorithms more than they trust themselves to make decisions about “objectively” observable phenomena — an estimate of a person’s weight based on a photo, for example. But trained experts, such as those working in the field of national security, report that they’d trust their own assessments more.

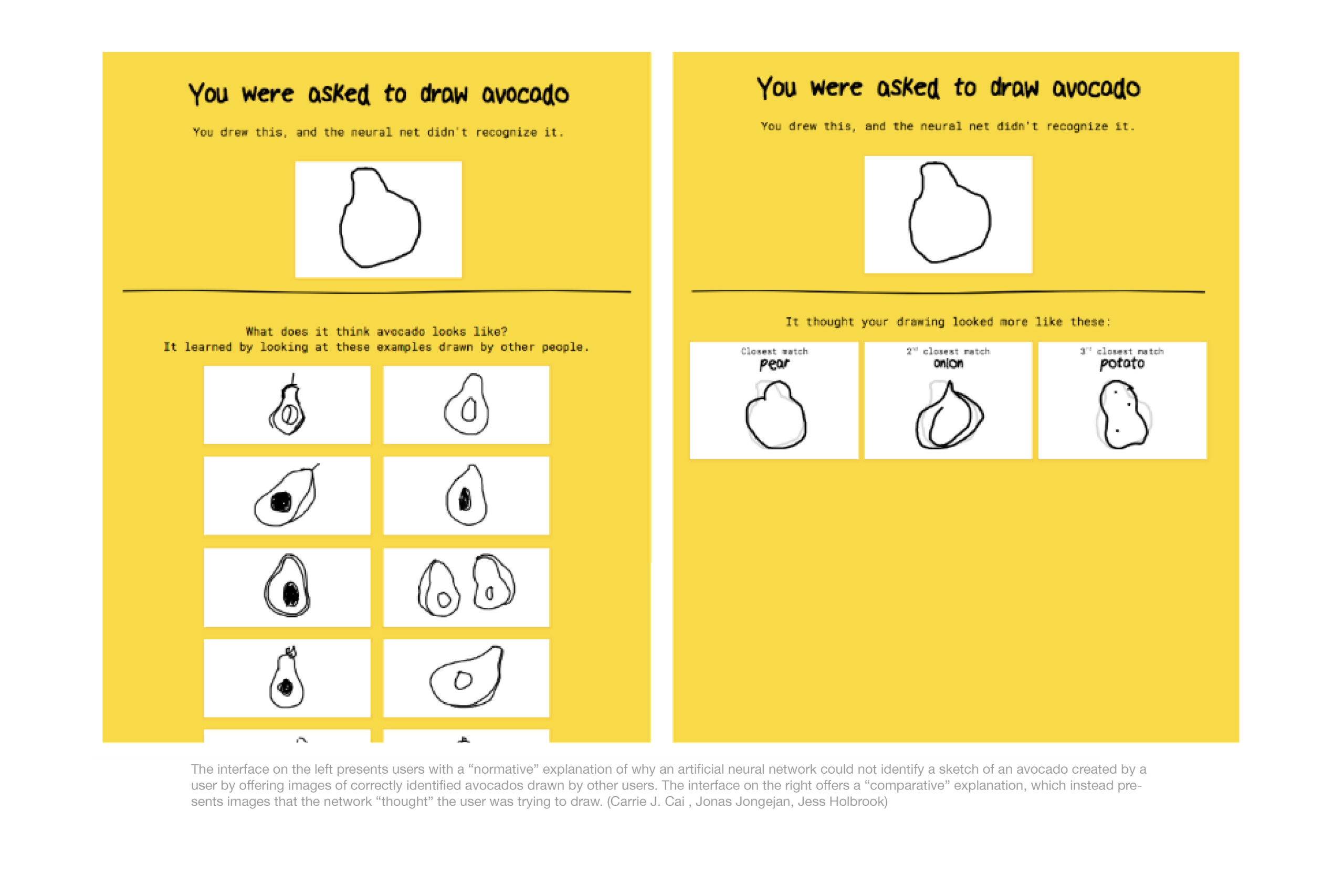

Insights from the fields of behavioral economics and user experience research shed further light on how AI-enabled, collaborative decision-making requires renewed attention to the problem of how humans understand and engage with intelligent machines. Carrie Cai, a researcher at Google Brain, has found that different kinds of explanations can lead users to come to different conclusions about algorithmic ability. Cai and her colleagues presented users with different interfaces in Quick Draw, an artificial neural network that aims to identify simple user sketches. If the network couldn’t identify the sketch — an avocado, for example — it offered two kinds of explanations to the user groups. “Normative” explanations showed the user images of other avocados that the model did correctly identify, while “comparative” explanations showed the user images of similarly shaped objects, like a pear or potato.

Users receiving normative explanations tended to trust the artificial neural network more: They reported having a better understanding of the system and perceived it to have higher capability. Users presented with comparative explanations, on the other hand, rated the network as having lower capability but also as being more “benevolent.” Cai and her colleagues propose that this was because such explanations exposed the network’s limitations and may have led to surprise but also because they served as evidence that the network was “trying,” despite its failures.

Cai’s research shows how explainability methods are also highly dependent on interface designs that can be adjusted to influence a user’s trust in an intelligent machine. This means that designers may exert significant influence over how human users develop their “theory of machine.” If users are shown only normative explanations, for example, they may develop an impression of an artificial neural network being more capable than it really is by hiding its blind spots and causing the user to trust it more than may be warranted. Again, regulations and third-party audits of AI systems and explainability methods may be useful here in avoiding such scenarios.

Building trust in intelligent machines is a complex process that requires inputs from multiple groups — explainability experts, regulatory bodies and human-AI interaction researchers. Better understanding how AI systems “reason” is a necessary step to bridging the gap between human and machine intelligence. It’s also crucial to managing expectations about the role AI should play in decision-making.